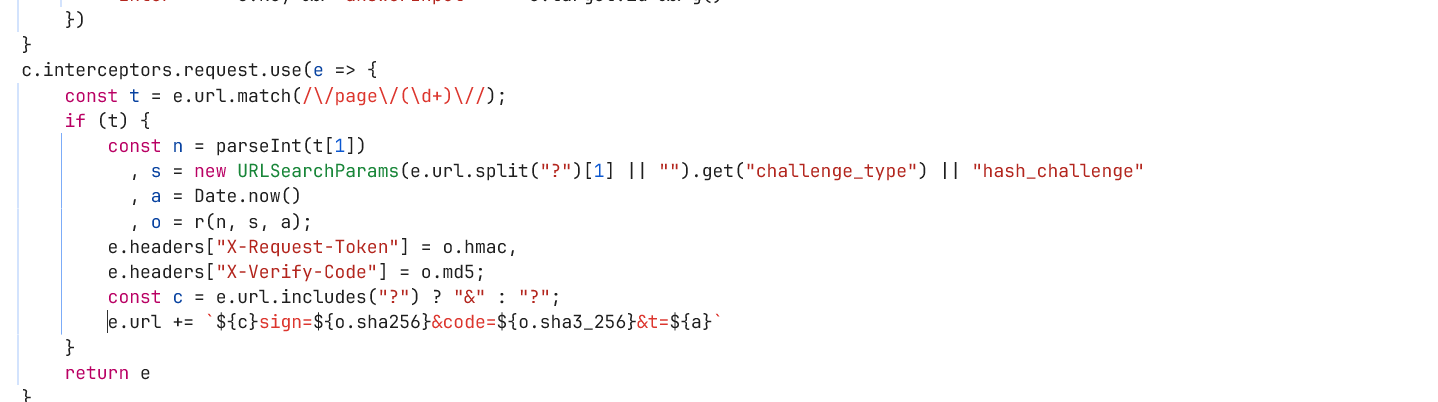

```python
import httpx
import numpy as np
from loguru import logger

# Request headers copied from a Chrome 140 browser session for the
# header_check challenge; they are sent unchanged with every request.
headers = {
    "accept": "application/json, text/javascript, */*; q=0.01",
    "accept-language": "zh-CN,zh;q=0.9",
    # "cache-control": "no-cache",
    "pragma": "no-cache",
    "priority": "u=1, i",
    "referer": "https://www.spiderdemo.cn/sec1/header_check/",
    "sec-ch-ua": "\"Chromium\";v=\"140\", \"Not=A?Brand\";v=\"24\", \"Google Chrome\";v=\"140\"",
    "sec-ch-ua-mobile": "?0",
    "sec-ch-ua-platform": "\"Windows\"",
    "sec-fetch-dest": "empty",
    "sec-fetch-mode": "cors",
    "sec-fetch-site": "same-origin",
    "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/140.0.0.0 Safari/537.36",
    "x-requested-with": "XMLHttpRequest",
}

# Fill in the sessionid from your own logged-in browser session.
cookies = {
    "sessionid": "",
}

params = {
    "challenge_type": "header_check",
}

total = 0  # renamed from `sum` so the built-in sum() is not shadowed

# Fetch pages 1..100 and accumulate the sum of every page's numbers.
for page in range(1, 101):
    url = f"https://www.spiderdemo.cn/sec1/api/challenge/page/{page}/"
    response = httpx.get(url, headers=headers, cookies=cookies, params=params)
    response.raise_for_status()  # stop early if the server rejects the request
    page_data = response.json()["page_data"]
    logger.info(f"page: {page}, {page_data}")
    total += np.array(page_data).sum()
    logger.info(response)

logger.success(f"sum: {total}")
```
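A possible variant of the loop, offered as a sketch under stated assumptions rather than the original approach: it shares one `httpx.Client` across all 100 requests, so connections and cookies are reused, and it separates the summing from the HTTP calls so the summing can be checked offline. The helper names `total_over_pages`, `make_httpx_fetcher` and `main` are hypothetical. The sketch also assumes `page_data` is a flat list of numbers. Under that assumption the built-in `sum()` gives the same total as `np.array(page_data).sum()`.

```python
from typing import Callable, Iterable

# Same endpoint pattern as the original script.
BASE_URL = "https://www.spiderdemo.cn/sec1/api/challenge/page/{page}/"


def total_over_pages(fetch_page: Callable[[int], list], pages: Iterable[int]) -> int:
    """Sum every number returned by fetch_page(page) over the given pages.

    Assumes each page's data is a flat list of numbers (hypothetical helper).
    """
    total = 0
    for page in pages:
        total += sum(fetch_page(page))
    return total


def make_httpx_fetcher(client, params: dict) -> Callable[[int], list]:
    """Return a fetch_page(page) function backed by an httpx.Client-like object.

    The client is expected to already carry the headers and cookies (hypothetical helper).
    """
    def fetch_page(page: int) -> list:
        response = client.get(BASE_URL.format(page=page), params=params)
        response.raise_for_status()  # surface HTTP errors instead of parsing a bad body
        return response.json()["page_data"]

    return fetch_page


def main(headers: dict, cookies: dict) -> int:
    """Fetch pages 1..100 with one shared client and return the grand total."""
    import httpx  # imported here so the helpers above work without httpx installed

    with httpx.Client(headers=headers, cookies=cookies) as client:
        fetch_page = make_httpx_fetcher(client, {"challenge_type": "header_check"})
        return total_over_pages(fetch_page, range(1, 101))
```

To run it against the site, call `main(headers, cookies)` with the `headers` and `cookies` dictionaries defined in the script. Passing `fetch_page` in as a parameter means `total_over_pages` can be checked against canned page data without touching the network.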